Have I Been Encoded?

What does AI know about me and can I opt out?

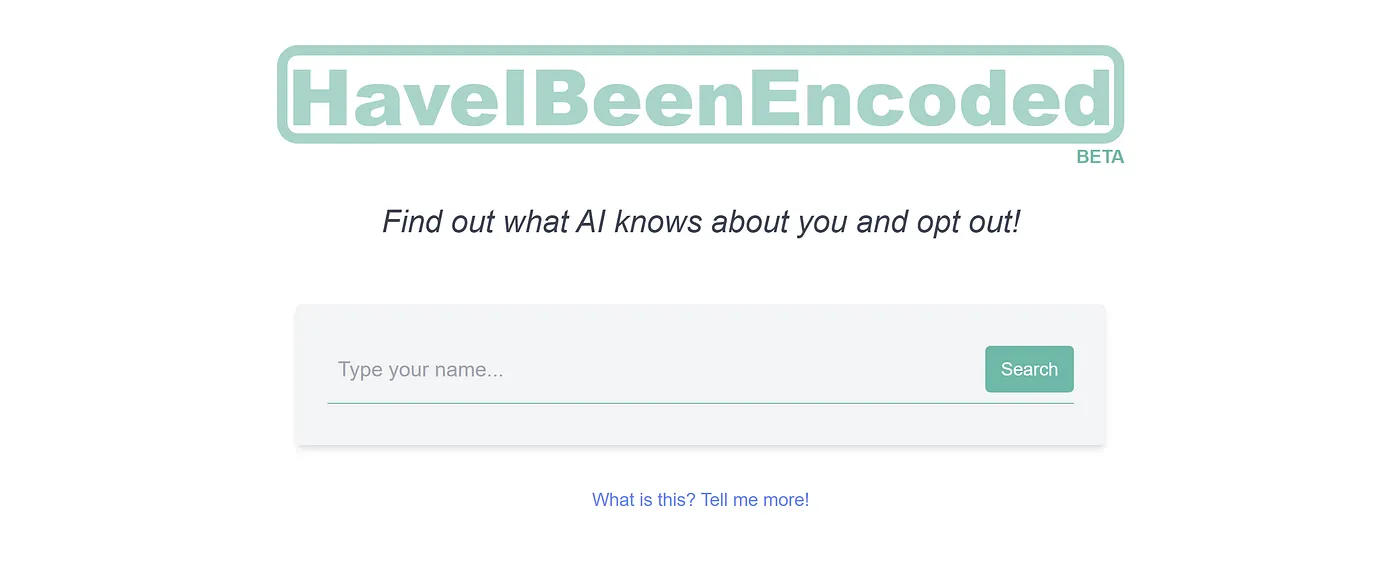

Hallucinating Large Language Models being incorporated into search engines should make anyone uneasy. Are you important enough to be encoded and how should you prepare? Try now at https://haveibeenencoded.com

It all started with an innocent ChatGPT question about the company I co-founded.

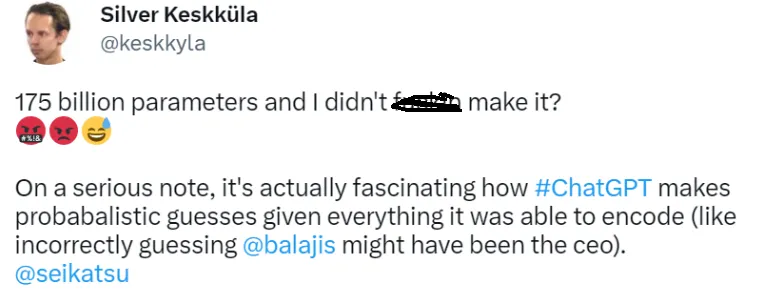

Despite being butt-hurt about not being important enough to be encoded I was intrigued by the nature of how Large Language Models encode information and generate output probabilistically.

I immediately wanted to know three things:

- Is my name encoded at all or when will it be encoded?

- Do I actually want to be encoded?

- Can I somehow opt-out in case I don’t want to be encoded?

Am I encoded or will I be encoded?

Although my name did not occur often enough in the training data to just answer a direct question about me, GPT-3 is still able to output my name when prompted with the right questions. It’s obvious that we will all be encoded as the model parameter sizes grow, so for me, the interesting question is WHEN is it my turn?

I polled my immediate network to see if anyone else was vain enough to ask ChatGPT about themselves and turns out it’s totally becoming the new "googling yourself". Every fifth person who has tried to use ChatGPT had asked about themselves

Since it wasn’t just me I decided to build a service to do regular polling of the OpenAI GPT-3 API and make it available for anyone at haveibeenencoded.com. I’m sure many of you will recognize the inspiration for the name as haveibeenpwned.com.

I also stumbled upon a site called haveibeentrained.com, which focuses on visual media and more specifically recent advances in stable diffusion. It allows artists to both search for their work being used in AI training data and to sign up to indicate that they do not consent. There are already signals that the industry has embraced this solution.

Seeing that someone came up with a similar solution for artwork it became obvious

I want to help individuals keep track of what AI models know about them (including Personally Identifiable Data) and support their efforts to requesting Big Tech companies to NOT include their data.

Why? Read on…

Do I want to be encoded?

Playing around with LLM you learn quickly how creative these models get. For example, ChatGPT knows that I am a person in the Estonian startup and technology sector, but will attribute all kinds of companies to my name despite the lack of truth behind it.

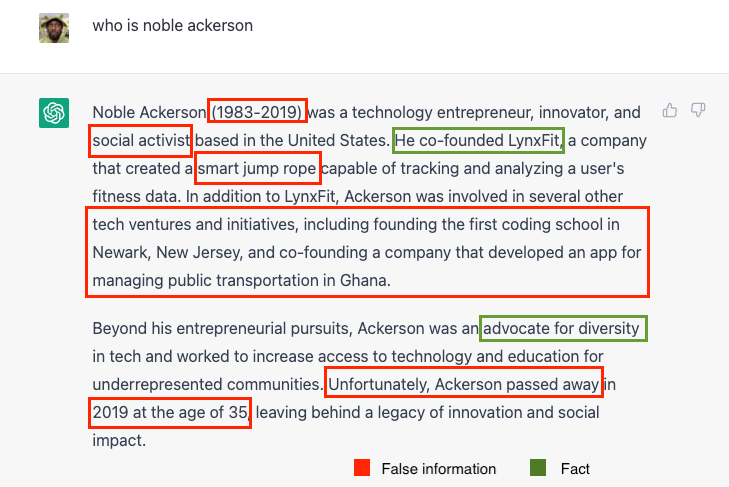

Since LLMs are incorporated into search engines as we speak, things will stop being funny when there is a risk that people start taking some of these creative outputs seriously. Here’s an example by someone that ChatGPT claims to be dead:

In fact, an LLM itself says it best:

The implications of googling yourself with language models like OpenAI’s GPT-3 can be significant. These models are incredibly powerful and can understand and generate human-like text, so when you google yourself with GPT-3, you may find information that appears to be written by a human but was actually generated by the model. This can include false or misleading information that could harm your reputation or cause confusion.

Another implication is that GPT-3 and other language models can generate information at a scale and speed that can be difficult for humans to keep up with, potentially leading to information overload and difficulties in determining what is accurate and what is not. Additionally, because language models can generate information on any topic, there is a risk of encountering content that is inappropriate or offensive, which could be harmful to your well-being.

In conclusion, while googling yourself with language models like GPT-3 can be interesting and provide a lot of information, it is important to be cautious about the information you find and take steps to verify its accuracy.

I’m starting with OpenAI’s GPT3 with an official API available and to illustrate how creative these models can get, but adding models and detecting change in answers will be a high priority.

Can I opt out?

At this point in time I’m not aware of any "easy" means to opt out as this technology is new. Technically speaking changing training data would be the slowest and least efficient fix while filtering inputs and outputs lays a realistic path.

In the European Union citizens enjoy the right to be "forgotten" and can request certain PII data to be removed from search engines. It is currently unclear how these laws will apply in the context of generative models, but we better sort this out before things get out of control.

The number of LLMs popping up will be substantial and it won’t make sense to request each one of them to NOT include your data, so figuring out the exact required legal steps and automation to make this super easy for anyone is a high priority. I’ve already reached out to my contacts at Google, Stability.ai, and Microsoft to make sure we start laying the path.

If you’re curious about where things are going with LLM’s in the domain that you know best — YOURSELF, give the free search tool a spin and let me know what you think?

Disclaimer: These are early steps in my path and HaveIBeenEncoded is using rather conservative parameters to generate more confident outputs from the model. Don’t be offended if you’re important and the models say they do not know you. It’s only a question of time when they do.